Over the past year, UC Irvine’s School of Humanities has positioned itself at the forefront of artificial intelligence engagement in higher education, taking a distinctively humanistic approach to understanding and shaping generative AI’s role in academia.

The School has tackled the challenges and opportunities AI presents through multiple initiatives – from pioneering policy development to hands-on experimentation with graduate students and hosting conferences with industry experts.

“It is in this human dimension that the impacts of AI matter most,” observed Tyrus Miller, dean of the School of Humanities and Distinguished Professor of English and art history, in a 2023 essay about AI. This perspective has guided the School’s multifaceted approach as it works to chart a course for generative AI integration grounded in humanistic values and critical thinking.

Developing frameworks

In 2024, the School of Humanities formed a Generative AI Workgroup, which brought together faculty members Qian Du, Amalia Herrmann, Bradley Queen, Braxton Soderman and Julio Torres to assess and develop comprehensive resources for integrating generative AI into humanities education.

Their efforts culminated in two key resources: a dedicated website providing practical resources for faculty, and White Paper 1.0, which offers a comprehensive framework for understanding and implementing AI in humanities teaching and research. “The humanities must be involved in critical conversations surrounding the social, political and cultural impacts of AI,” the paper notes, highlighting the unique position humanities scholars hold in understanding AI’s human dimensions.

The document outlines three guiding principles: grounding generative AI integration in specific disciplinary objectives; promoting student-centered critical thinking; and encouraging diverse perspectives on issues like bias, ethics and accessibility.

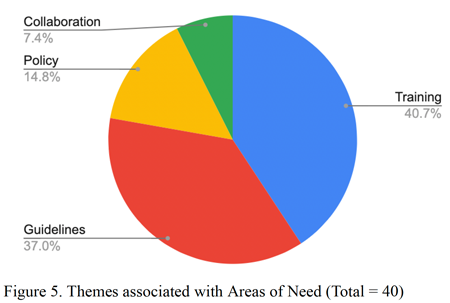

Drawing on a survey it administered, the workgroup found that faculty approaches to AI varied widely – from complete prohibition to selective integration. What emerged clearly was a desire for more guidance, with 40% of respondents requesting additional training and 37% seeking clearer guidelines for classroom implementation.

The white paper also provides specific guidance for different course types. In writing courses, it outlines ways to use AI as a tool for enhancing critical analysis and reflection, such as having students evaluate and revise AI-generated writing. For language courses, it suggests practical applications like using AI for instant feedback and conversation practice. Throughout, the emphasis remains on thoughtful integration that serves core humanities learning goals.

Complementing these early efforts, Associate Professor of Teaching Qian Du and Professor of English Daniel M. Gross recently expanded on these ideas in a Times Higher Education article, exploring how generative AI challenges traditional notions of writing, voice and academic expression.

Experimenting in the classroom

As part of the humanities’ exploration of AI in education, Dean Miller pilot-tested an experimental AI “bot” tool in his Fall 2024 graduate seminar, “Modernism and Avant-Garde.” The course wove generative AI engagement into its study of 20th-century art and visual culture, using the technology as both an aid for managing a large body of scholarly literature and a subject of critical inquiry itself.

“We want to raise AI usage to a level of explicitness in the classroom, rather than a background use-scenario that is either approved or unapproved,” explained Dean Miller in an article chronicling the new tool, ClassChat.

The course incorporated structured AI experiments alongside traditional art historical study. Students were required to complete three “AI reflection” assignments throughout the quarter, documenting their interactions with ClassChat and critically evaluating the tool’s capabilities and limitations in art historical research and instruction. The course encouraged experimentation with generative AI – students could use AI tools in their assignments but had to document their use and attribute any AI-generated content accordingly.

While the tool demonstrated some success in managing course knowledge, the class concluded that current AI tools need significant development to meet the demands of graduate-level art history, particularly because the field involves both textual and visual analysis. Following this pilot course, UCI will continue to refine the ClassChat tool for use across different disciplines, including future humanities courses if faculty choose to use it.

Forming thought partners

The School of Humanities co-hosted two major conferences last year, bringing together scholars and industry professionals to explore AI’s implications from different angles.

In May 2024, alongside the UC Humanities Research Institute (UCHRI), the School co-hosted “The AI Paradigm: Between Personhood and Power,” a two-day symposium that examined AI as a cultural phenomenon that influences our ideas, beliefs, narratives and social practices.

Scholars from across the UC system examined topics ranging from AI’s impact on writing and authorship to its implications for university infrastructure and student rights. UCI faculty members explored issues like plagiarism detection, student rights and data bias. Moving beyond technical concerns, discussions addressed fundamental questions about AI’s role in shaping academic work, student learning and institutional practices.

Earlier in the year, the School partnered with UCI Beall Applied Innovation to present “AlphaPersuade: A Summit on Ethical AI.” The event brought together industry leaders and academics to develop an ethical framework for AI’s growing persuasive capabilities.

Drawing on principles of democratic rhetoric, the summit aimed to help organizations understand and responsibly manage AI’s potential to influence human behavior and belief, while addressing challenges like disinformation, bias and AI hallucinations.

Along with keynotes from AI experts, attendees engaged in interactive workshops and roundtable discussions exploring practical applications in tooling, pedagogy and research partnerships.

The future of AI in the humanities

Thanks to the groundwork laid by last year’s initiatives, the School of Humanities is preparing to continue its engagement with AI.

Its most important initiative, still in an exploratory phase, is to offer a minor in AI along with partners in the Donald Bren School of Information and Computer Sciences and the Beall Center for Applied Innovation. It would serve students across multiple disciplines and majors, from the humanities, arts and social sciences to the natural sciences, engineering, health sciences and business.

Students pursuing the minor would be introduced to a range of practical, social, ethical and aesthetic dimensions of this emerging technology. The minor would certify that UCI students have gained AI knowledge and skills critical for today’s work world.

Even more importantly, perhaps, the humanities-based courses would equip them to think more deeply about AI’s impact on social and cultural life and become more reflective users of a technology that is becoming ever more pervasive.

While many practical conversations and a Senate approval process lie ahead before the minor becomes a reality, we are hopeful that we can offer UCI students this exciting opportunity in the near future.
